Examples

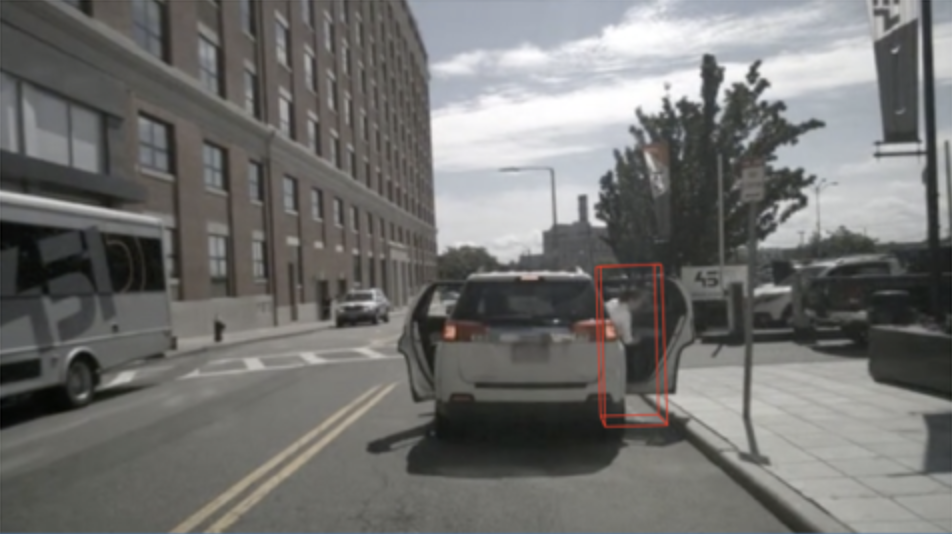

For every command, the referred object is indicated in bold. For every image, the referred object from the command is indicated with a red bounding box.

You can park up ahead behind the silver car, next to that lamp post with the orange sign on it

My friend is getting out of the car. That means we arrived at our destination! Stop and let me out too!

Turn around and park in front of that vehicle in the shade

Yeah that would be my son on the stairs next to the bus. Pick him up please